Abstract

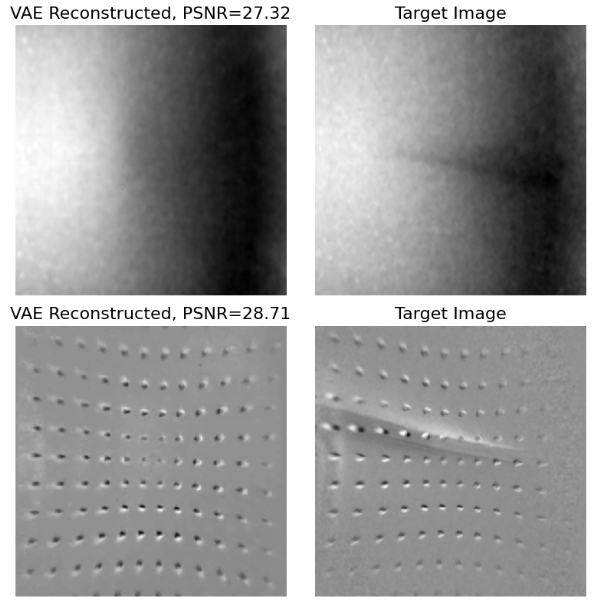

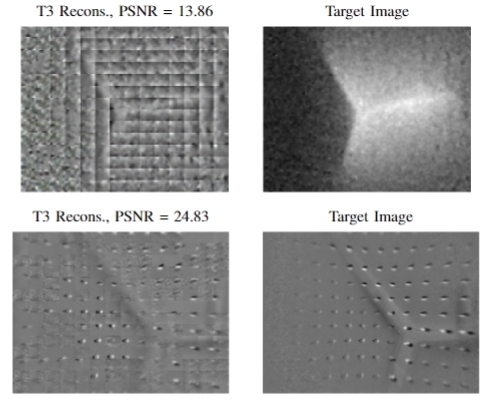

Modern tactile sensors produce high-dimensional raw feedback such as images, which makes that feedback difficult to store and process efficiently and hard to generalize across sensors. To address these concerns, we introduce a novel implicit function representation for tactile sensor feedback. Rather than directly using raw tactile images, we propose neural implicit functions trained to reconstruct the tactile dataset, producing compact representations that capture the underlying structure of the sensory inputs. These representations offer several advantages over their raw counterparts: they are compact, enable probabilistically interpretable inference, and facilitate generalization across different sensors. We demonstrate the efficacy of this representation on the downstream task of in-hand object pose estimation, achieving improved performance over image-based methods while simplifying downstream models.

Results

Authors

*: We use the dataset from Touch2Touch.