Abstract

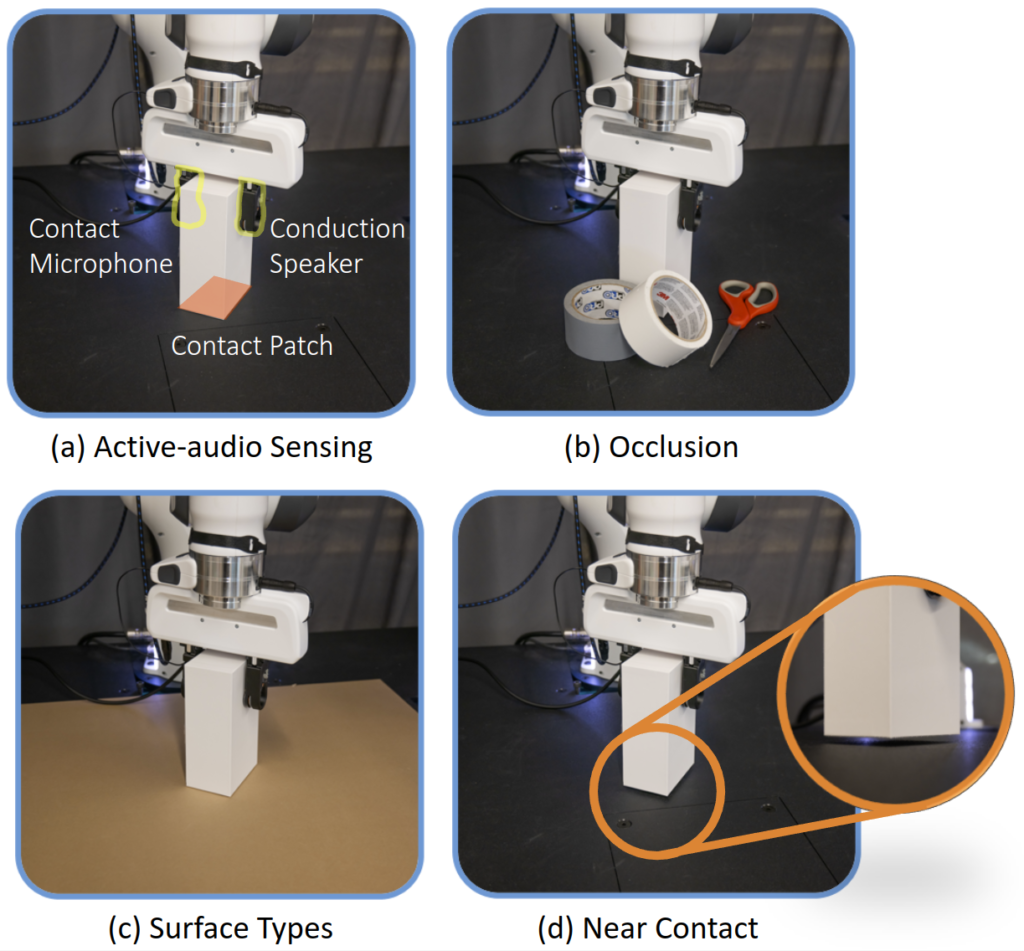

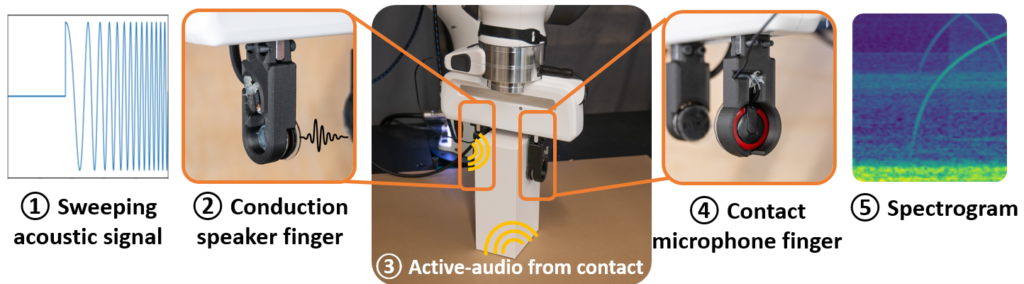

Estimating contact locations between a grasped object and the environment is important for robust manipulation. In this paper, we present a visual-auditory method for extrinsic contact estimation, featuring a real-to-sim approach for auditory signals. Our method equips a robotic manipulator with contact microphones and speakers on its fingers, along with an externally mounted static camera that provides a visual feed of the scene. As the robot manipulates objects, it detects contact events with surrounding surfaces using auditory feedback from the fingertips and visual feedback from the camera. A key feature of our approach is the transfer of auditory feedback into a simulated environment, where we learn a multimodal representation that is then applied to real-world scenes without additional training. This zero-shot transfer estimates contact location and size accurately and robustly, as demonstrated in our simulated and real-world experiments in various cluttered environments.

Video

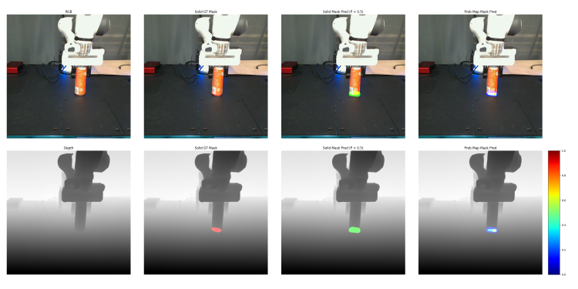

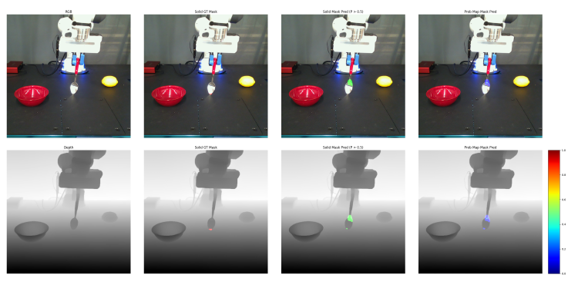

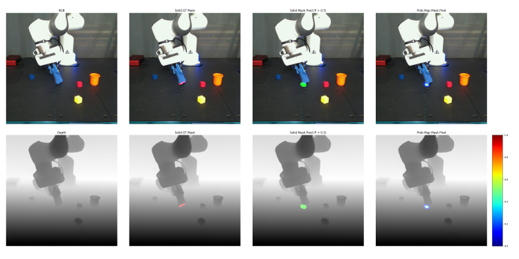

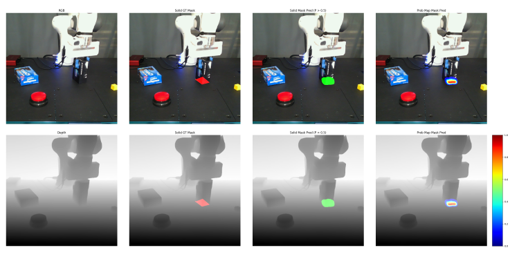

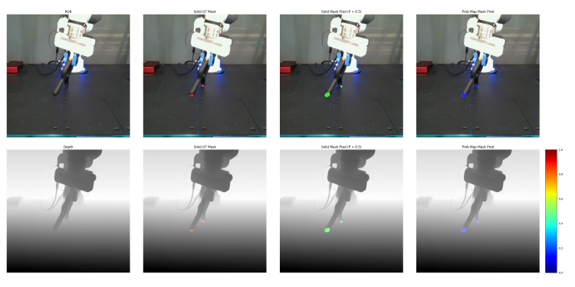

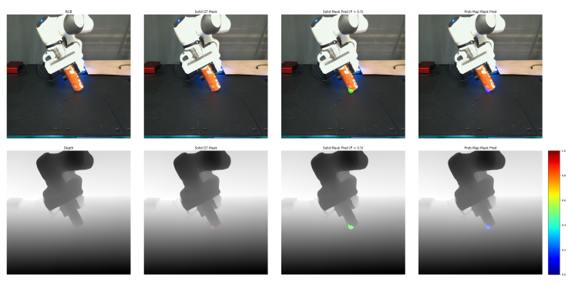

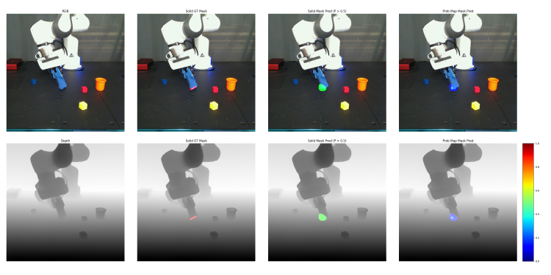

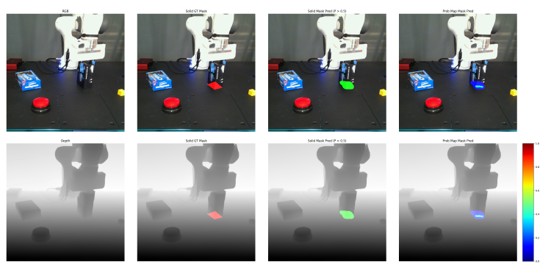

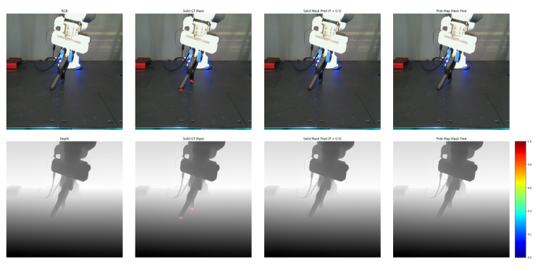

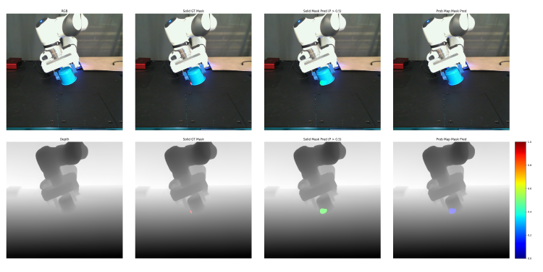

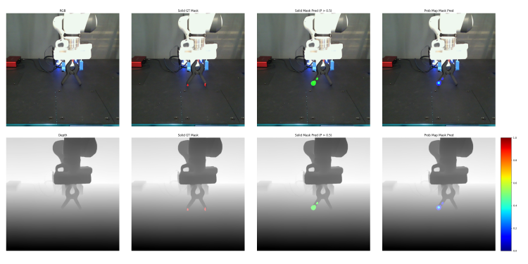

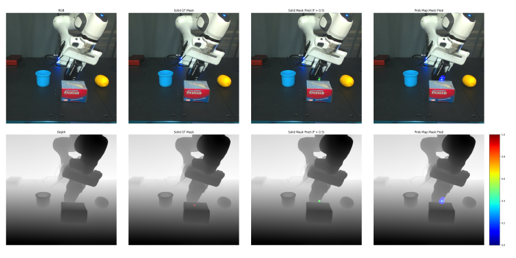

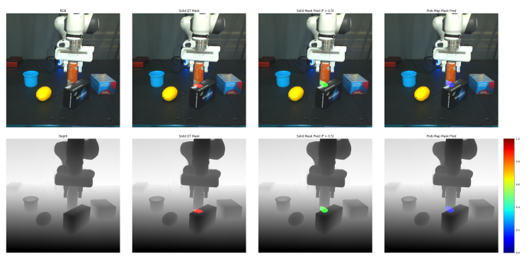

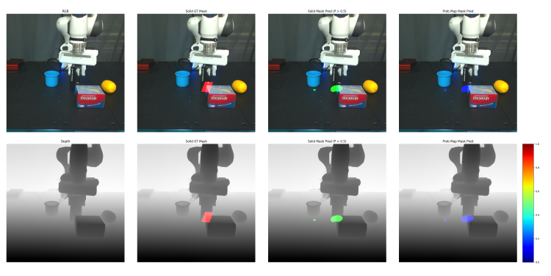

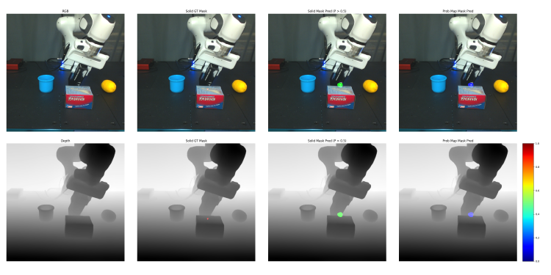

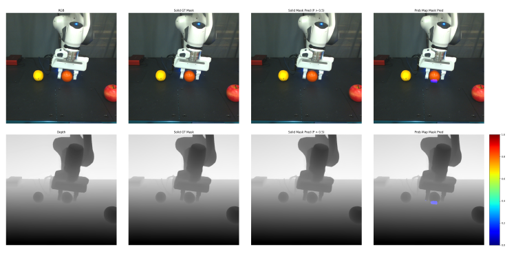

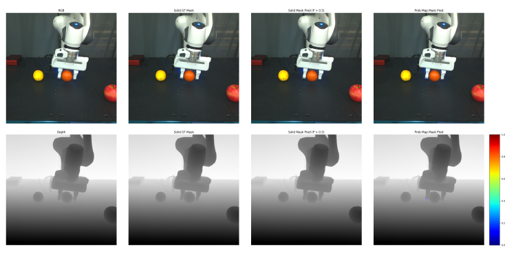

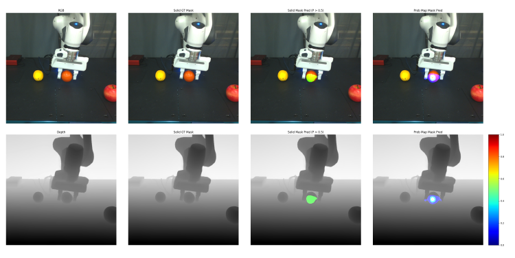

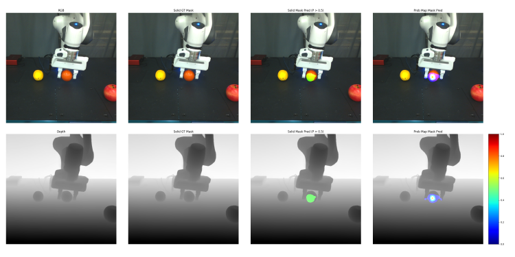

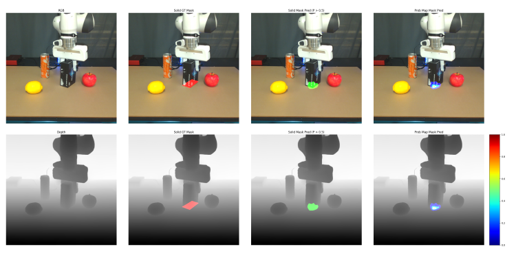

Results

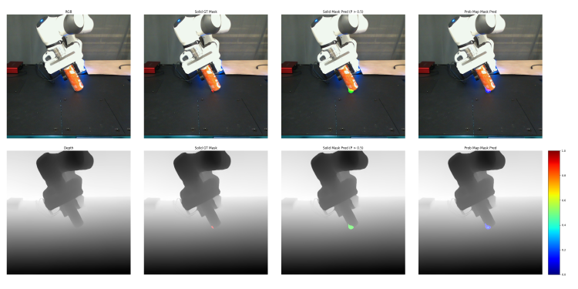

- General cases

Our model:

w/o audio:

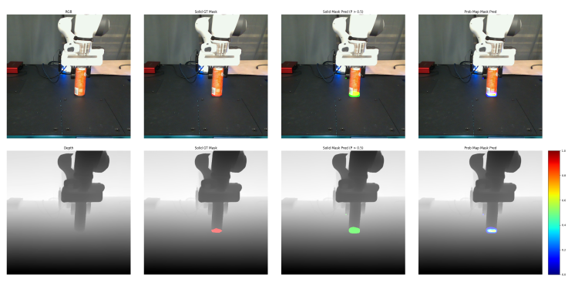

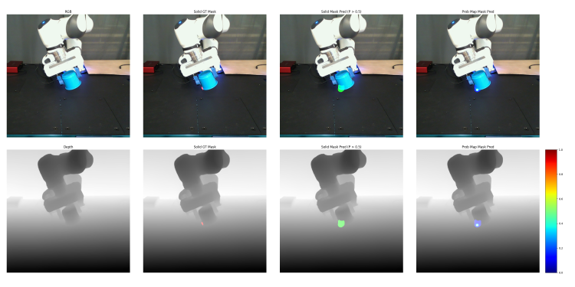

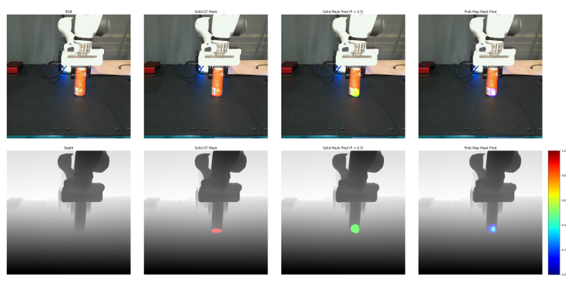

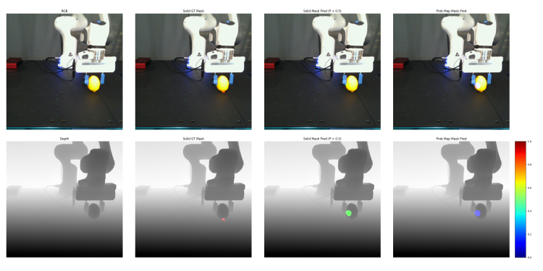

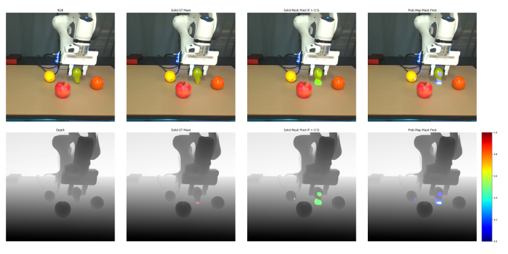

- Occlusion

Our model:

w/o audio:

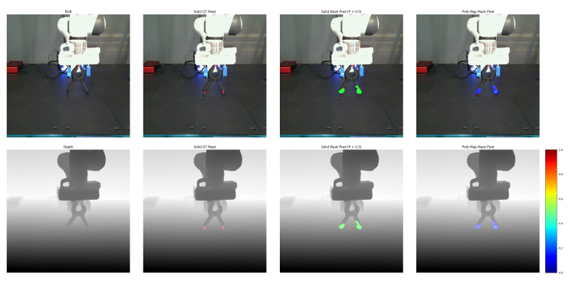

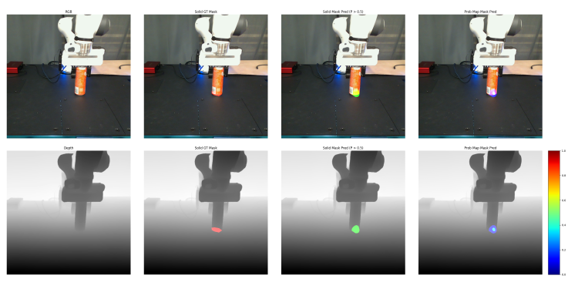

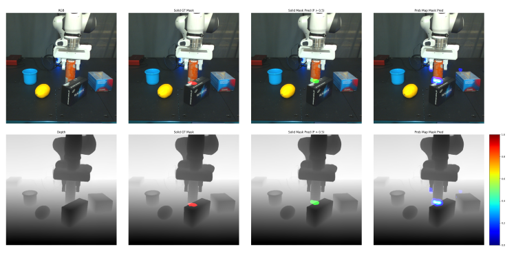

- Near contact

Our model:

w/o audio:

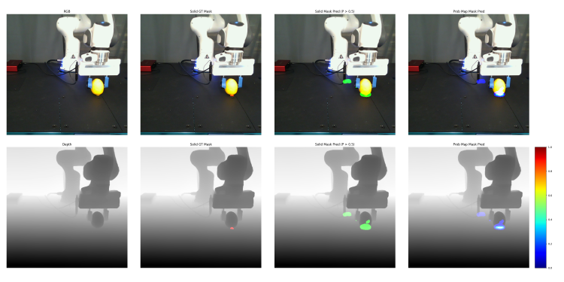

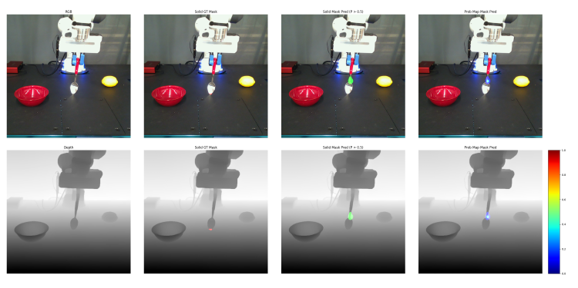

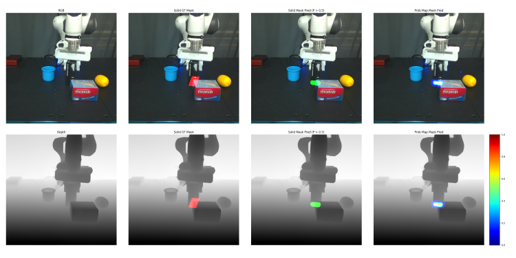

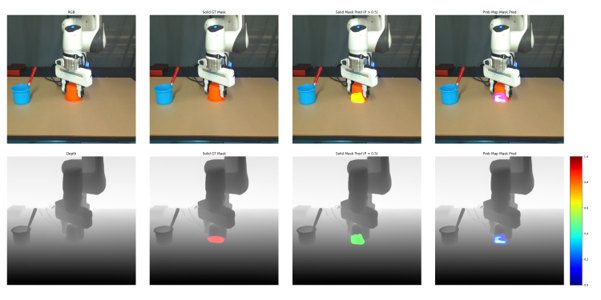

- Different surface

Our model:

w/o audio: